Agents are here. How we build and use them is challenging many of the foundations for building software that we established over the last few decades, and even the very idea of what a product is.

This article continues previous explorations of how AI is impacting design and product (Vibe-code designing, Evolving roles), and is based on a presentation delivered at the ‘The Age of TOO MUCH’ exhibition at Protein Studios, as part of London Design Week 2025.

There’s a scene in one of my favorite movies, Interstellar, where the characters are on a remote, water-covered planet. In the distance are what initially appear to be mountains, but as Cooper, the main character, looks on, he realizes that they are in fact enormous waves, steadily building and towering ominously over them.

With AI, it feels like we’ve had a similarly huge wave building on the horizon for the last few years. I wrote previously about how Generative AI and Vibe Coding are changing how we design. In recent months it feels like another seismic shift is underway with agentic AI. So what exactly is this wave, and how is it reshaping the landscape we thought we knew?

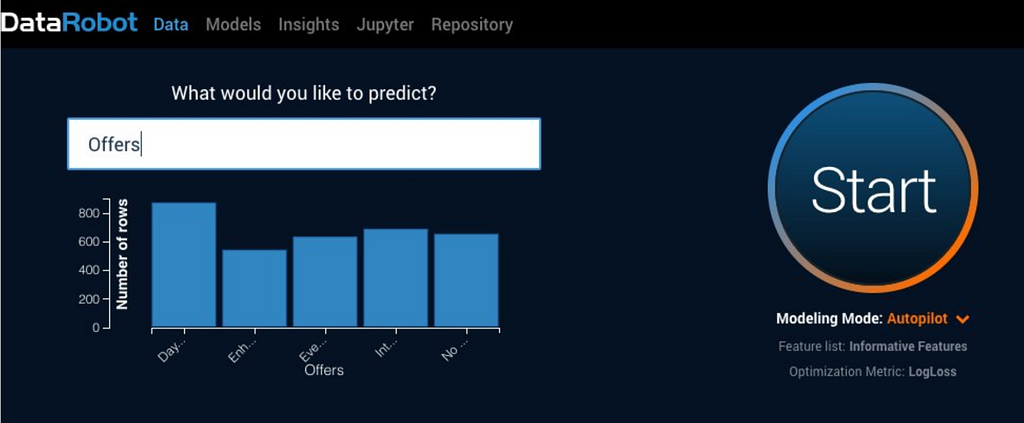

I lead the product design team at DataRobot, an enterprise platform that helps teams build, govern and operate AI models and agents at scale. From this vantage point, I’m seeing these changes reshape not just how we design, but also many long-held assumptions about what products are and how they’re built.

What’s actually changing

Agents are a fundamentally different paradigm to predictive and generative AI. What sets them apart, aside from being multimodal and capable of deep reasoning, is their autonomous nature. It sounds deceptively simple, but when software has agency — the ability to make decisions and take actions on its own — the results can be quite profound.

This creates a fundamental challenge for companies integrating AI into software that is traditionally built for deterministic, predictable workflows. Agentic AI is inherently probabilistic: the same input can produce different outputs, and agents may take unexpected paths to reach their goals. This mismatch between deterministic infrastructure and probabilistic behavior creates new design challenges around governance, monitoring, and user trust. These aren’t just theoretical concerns; they’re already playing out in enterprise environments. That’s why we partnered with Nvidia to build on their AI Factory design and deliver agentic apps embedded directly into SAP environments, so customers can run these systems securely and at scale.

But even with this kind of hardened infrastructure, moving from experimentation to impact remains difficult. Recent MIT research found that 95% of enterprise generative AI pilots fail to deliver measurable impact, highlighting an industry-wide challenge in moving from prototype to production. Our ‘AI Expert’ service, where specialised consultants work directly with customers to deploy and run agents, delivers outstanding results through personalized support. To extend this level of guidance to a broader customer base, we needed approaches that could lower complexity barriers at scale.

Moving from AI experimentation to production involves significant technical complexity. Rather than expecting customers to build everything from the ground up, we decided to flip the offering and lead instead with a series of agent and application templates that give them a head start.

To use a food analogy, instead of handing customers a pantry full of raw ingredients (components and frameworks), we now provide something closer to ‘HelloFresh’ meal kits: pre-scaffolded agent and application templates with prepped components and proven recipes that work out of the box. These templates codify best practices across common customer use cases and frameworks. AI builders can clone them, then swap out or modify components using our platform or in their preferred tools via API.

This approach is changing how AI practitioners use our platform. One significant challenge is creating front-end interfaces that consume the agents and models — apps for forecasting demand, generating content, retrieving knowledge or exploring data.

Larger organisations with dedicated development teams can handle this easily. But smaller organisations often rely on IT teams or our AI experts to build these interfaces, and app development isn’t their primary skill.

We mitigated this by providing customisable reference apps as starting points. These work if they are close to what you need, but they’re not straightforward to modify or extend. Practitioners also use open-source frameworks like Streamlit, but the quality of these often falls short of enterprise requirements for scale, security and user experience.

To address this, we’re exploring solutions that use agents to generate dynamic applications — dashboards with complex user interface components and data visualizations, tailored to specific customer needs, all using the DataRobot platform as the back-end. The result is that users can generate production-quality applications in days, not weeks or months.

https://medium.com/media/1c5c875292b4c38002fa61e15fab4140/href

This shift towards autonomous systems raises a fundamental question: how much control should we hand over to agents, and how much should users retain? At the product level, this plays out in two layers: the infrastructure AI practitioners use to create and govern workflows, and the front-end apps people use to consume them. Our customers are now building both layers simultaneously — guidance agents configure the platform scaffolding while different generative agents build the React-based applications that sit on top.

These aren’t prototypes — they’re production applications serving enterprise customers. AI practitioners who might not be expert app developers can now create customer-facing software that handles complex workflows, rich data visualization, and business logic. The agents handle React components, layout and responsive design, while the practitioners focus on domain logic and user workflows.

We are seeing similar changes in other areas too. Teams across the organisation are using AI tools like V0 to build compelling demos and prototypes. Designers are working alongside front-end developers to contribute production code. But this democratization of development creates new challenges: now that anyone can build production software, the mechanisms for ensuring quality and scalability of code, user experience, brand and accessibility need to evolve. Instead of checkpoint-based reviews, we need to develop new systems that can scale quality to match the new pace of development.

Learning from the control paradox

There are lessons from our AutoML (automated machine learning) experience that apply here. While AutoML workflows helped democratize access for many users, some experienced data scientists and ML engineers felt control was being taken away. We had automated the parts they found most rewarding — the creative, skilled work of selecting algorithms and crafting features — while leaving them with the tedious infrastructure work they actually wanted to avoid.

We’re applying this lesson directly to how we build agentic applications. Just as AutoML worked when it automated feature engineering but not always model interpretation, our customers will succeed when agents handle UI implementation while AI/ML experts retain control over the agentic workflow design. The agents automate what practitioners don’t want to do (component wiring, state management) while preserving agency over what they do care about (business logic, user experience decisions).

Now, with agentic AI, this tension plays out at a much broader scale with added complexity. Unlike the AutoML era, when we primarily served data scientists and analysts, we now target a broader range of practitioners, including app developers who might be new to AI workflows, along with the agents themselves as end users.

Each group has different expectations about control and automation. Developers are comfortable with abstraction layers and black-box systems — they’re used to frameworks that handle complexity under the hood, but they still want to debug, extend, and customise when needed. Data scientists still want explainability and intervention capabilities. Business users just want results.

But there’s another user type we’re designing for: the agents themselves. This represents a fundamental shift in how we think about user experience. Agents aren’t just tools that humans use — they’re collaborative partners that need to interact with our platform, make decisions, and work alongside human practitioners.

When we evaluate new features now, we ask: will the primary user be human or agent? This changes everything about how we approach information architecture, API design, and even visual interfaces (if required). Agents need different types of feedback, different error handling, and different ways to communicate their reasoning to human collaborators.
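To make the human-or-agent question concrete, here is a minimal sketch of one error rendered two ways. It is an illustration only, not DataRobot's API: the `ValidationError` class, its fields, and the `MISSING_TARGET` code are all hypothetical names invented for this example.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ValidationError:
    """A hypothetical platform error, independent of who will read it."""
    code: str
    field: str
    message: str
    retryable: bool


def render_for_human(error: ValidationError) -> str:
    """Humans get a short sentence they can act on."""
    return f"There is a problem with '{error.field}': {error.message}"


def render_for_agent(error: ValidationError) -> str:
    """Agents get stable, machine-readable fields they can branch on."""
    return json.dumps(asdict(error), sort_keys=True)


# Hypothetical error raised when a required setting is missing.
error = ValidationError(
    code="MISSING_TARGET",
    field="target_column",
    message="Choose the column you want to predict.",
    retryable=True,
)

human_view = render_for_human(error)
agent_view = json.loads(render_for_agent(error))
```

The underlying error is the same in both cases. What differs is the contract: prose for a person, and a stable code plus a `retryable` flag that an agent can use to decide its next step without parsing a sentence.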

Looking ahead, it’s possible agents may emerge as primary users of enterprise platforms. This means designing for human-agent collaboration rather than just human-computer interaction, creating systems where agents and humans can work together effectively, each contributing their strengths to the workflow.

From designing flows to architecting systems

These changes challenge fundamental assumptions about what a product is. Traditionally, products are solutions designed to solve specific problems for defined user groups. They usually represent a series of trade-offs: teams research diverse user needs, then create single solutions that attempt to strike the best balance of multiple use cases. This often means compromising on specificity and simplicity to achieve broader appeal.

Generative AI has already begun disrupting this model by enabling users to bypass traditional product design and development processes entirely. Teams can now get to an approximation of an end result almost instantaneously, then work backward to refine and perfect it. This compressed timeline is reshaping how we think about iteration and validation.

But agentic AI offers something more fundamental: the ability to generate products and features on demand. Instead of static experiences that try to serve a broad audience, we can create dynamic systems that generate specific solutions for specific contexts and audiences. Users don’t just get faster prototypes — they get contextually adaptive experiences that reshape themselves based on individual needs.

This shift changes the role of design and product teams. Instead of executing individual products, we become architects of systems that can create products. We curate the constraints, contexts, and components that agents use to generate experiences while maintaining brand guidelines, product principles, and UX standards.

But this raises fundamental questions about interaction design. How do affordances work when interfaces are generated on demand? Traditional affordances — visual cues that suggest how an interface element can be used — rely on consistent patterns that users learn over time. Interestingly, AI tools like Cursor, V0, and Lovable address this challenge by leveraging well-established UX frameworks like Tailwind and ShadCN. Rather than creating novel patterns that users need to learn, these tools generate interfaces using robust, widely-adopted design systems that provide familiar starting points. When agents generate interfaces contextually using these established frameworks, users encounter recognizable patterns even when the specific interface is new.

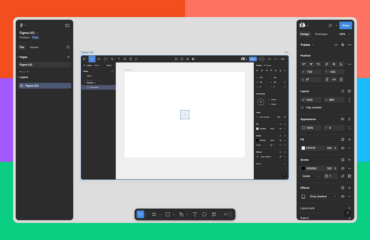

At DataRobot, we’ve approached this challenge by systematizing our design process and standards as agent-aware artifacts. We’ve converted our Figma design system into machine-readable markdown files that agents can consume directly. Using Claude, we translated our visual design guidelines, component specifications, and interaction principles into structured text that can be dropped as context into AI tools like Cursor, V0, and Lovable.
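As a rough illustration of what a machine-readable design artifact can look like, the sketch below turns a small set of design tokens and rules into a markdown file that could be pasted into an AI tool as context. The token names, values, and rules are hypothetical, and this is an assumption about the general shape of such a file rather than a description of our actual pipeline.

```python
from typing import Dict, List

# Hypothetical tokens; a real design system export would be far larger.
DESIGN_TOKENS: Dict[str, Dict[str, str]] = {
    "color": {"primary": "#0B5FFF", "surface": "#FFFFFF"},
    "spacing": {"sm": "8px", "md": "16px"},
}

# Hypothetical usage rules written as plain sentences an agent can follow.
DESIGN_RULES: List[str] = [
    "Use `color.primary` only for the main action on a screen.",
    "Use `spacing.md` between form fields.",
]


def tokens_to_markdown(tokens: Dict[str, Dict[str, str]], rules: List[str]) -> str:
    """Render tokens and rules as markdown that an AI tool can take as context."""
    lines = ["# Design system context", ""]
    for group, values in tokens.items():
        lines.append(f"## {group.capitalize()} tokens")
        lines.append("")
        for name, value in values.items():
            lines.append(f"- `{group}.{name}`: {value}")
        lines.append("")
    lines.append("## Rules")
    lines.append("")
    lines.extend(f"- {rule}" for rule in rules)
    return "\n".join(lines)


markdown_context = tokens_to_markdown(DESIGN_TOKENS, DESIGN_RULES)
```

Keeping the rules as short imperative sentences next to the raw token values gives an agent both the vocabulary (what exists) and the intent (when to use it).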

This approach allows us to maintain design quality at scale. Instead of manually reviewing every generated interface, we encode our design standards upstream, ensuring that agents generate consistent, accessible, and brand-appropriate experiences by default.

We’re already seeing this in action within DataRobot itself. Our AI Experts use these agent-aware design artifacts when building agentic applications, maintaining design consistency through our systematized guidelines while focusing on the unique business logic and user workflows.

What this means for product & design leaders

I previously wrote about how the boundaries between disciplines are blurring. What shape the product triad will take, or if it remains a triad at all, is unclear. While it’s likely that design will absorb many front-end development tasks (and vice-versa), and some PMs will take on design tasks, I don’t think any roles will disappear entirely. There will always be a need for specialists; while individuals can indeed do a lot more than before, there is only a certain amount of context that we can all retain.

So while we might be able to execute more, we still need people who can go deep on complex problems along with a level of craft that becomes increasingly valuable as a differentiator. In a world where anyone can create anything, the quality of execution and depth of understanding that comes from specialization will be what separates good work from exceptional work.

The companies that are going to distinguish themselves are the ones that show their craft. That they show their true understanding of the product, the true understanding of their customer, and connect the two in meaningful ways.

— Krithika Shankarraman (Product, OpenAI)

As these boundaries blur and new capabilities emerge, it’s worth remembering what remains constant. The hard problems remain hard:

- Understanding people and their needs within complex contexts. What unmet needs are we addressing?

- Building within interdependent systems and enterprise constraints. Will this work with existing architectures?

- Aligning technical capabilities to business value. Is this solving a problem that matters?

Our role as design leaders is evolving from crafting individual experiences to architecting systems that generate experiences. We’re evolving from designing screens to designing systems that can make contextual decisions while maintaining design integrity.

This changes our methodology fundamentally. Instead of designing for personas or generalised scenarios, we’re designing systems that adapt to individual contexts in real-time. Rather than creating single user journeys, we’re building adaptive frameworks that change pathways based on user intent and behavior.

User research also evolves: we still need to understand human needs, but now we must translate those insights into rules and constraints that agents can interpret. The challenge isn’t just knowing what users want, but encoding that knowledge to maintain design quality across infinite interface variations.

https://medium.com/media/0adf05b68982681f7579e5f9734e2af3/href

This fundamental truth doesn’t change — but our methods for translating human understanding into actionable systems do. The uniquely human work of developing deep contextual understanding becomes more valuable, not less, as we learn to encode that wisdom for AI systems to use effectively.

Design quality in an agent-first world

This shift toward agent-generated experiences creates new design challenges. If agents are creating interfaces on demand, how do you maintain coherence across an organisation? How do you ensure accessibility compliance? How do you handle edge cases that training data didn’t capture?

We believe that part of the answer lies in creating foundational artifacts that both humans and agents can consume. At DataRobot, we are currently exploring:

- Making documentation agent-aware using standards and conventions like MCP, agents.md and llms.txt.

- Converting our design system into foundational markdown files that codify principles and patterns, for use in AI development tools.

- Creating automated checks for UI language, accessibility standards, and interaction patterns.
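One example of an automated accessibility check is colour contrast. The sketch below implements the WCAG 2.x contrast ratio formula and tests a colour pair against the 4.5:1 threshold for normal-size text at level AA. It is a minimal, assumed example of the kind of check that could run on generated interfaces, not a description of our own tooling, and the function names are illustrative.

```python
def _channel_to_linear(value: int) -> float:
    """Convert one 0-255 sRGB channel to linear light (WCAG 2.x definition)."""
    scaled = value / 255
    if scaled <= 0.03928:
        return scaled / 12.92
    return ((scaled + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a colour written as '#RRGGBB'."""
    digits = hex_color.lstrip("#")
    red, green, blue = (int(digits[i:i + 2], 16) for i in (0, 2, 4))
    return (
        0.2126 * _channel_to_linear(red)
        + 0.7152 * _channel_to_linear(green)
        + 0.0722 * _channel_to_linear(blue)
    )


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1.0 (identical) to 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_body_text(foreground: str, background: str) -> bool:
    """True when the pair meets the 4.5:1 AA threshold for normal-size text."""
    return contrast_ratio(foreground, background) >= 4.5
```

On a white background, `#767676` passes this check while the slightly lighter `#777777` narrowly fails. That difference is hard to spot in a visual review and trivial to catch automatically.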

This approach enables others in our organisation to build compelling applications with AI tools while adhering to our design system and brand consistency. But here’s the crucial insight: while these AI-generated applications might look impressive, the polish can mask underlying UX challenges. As Preston notes:

https://medium.com/media/1deda45b93d917a65c099af08ac55b7e/href

AI tools excel at execution, but they don’t replace the difficult UX work required to ensure you’re executing the right thing.

This creates a new challenge for design teams: when everyone is a builder, how do we make sure we build the right things and meet quality standards? We’ve struggled with knowing when to lean in. There are times, like creating demos or throwaway prototypes, when it’s fine for design to be less involved. But there are critical moments when our involvement is important; otherwise, poor-quality experiences can ship to production. Our customers don’t care how the products they interact with were built, or by whom.

The key is catching issues as far upstream as possible. This means the documentation and enablement materials that guide how people use and customise our templates have become the new ‘products’ our design team is responsible for. By creating thorough agent-aware guidelines and design system documentation, we can ensure higher quality output at scale.

But we still need quality checks without slowing down the process too much. We’re still learning how to balance speed with standards: when to trust the system we’ve built and when human design judgment is a must-have.

Riding the wave

The last few years have felt like a rollercoaster because they have been. But I believe our job as designers is to lean into uncertainty, to make sense of it, shape it, and help others navigate it.

Like Cooper in Interstellar, we’ve recognised that what seemed like distant mountains are actually massive waves bearing down on us. The question isn’t whether the wave will hit; it’s already here. The question is whether we’ll be caught off guard or whether we’ll have prepared ourselves to harness its power.

Here’s what we’ve learned so far at DataRobot, for anyone navigating this transition:

- Embrace the change & challenge orthodoxies: Try new tools and workflows outside your traditional lane. As roles blur, staying relevant means expanding your capabilities.

- Build systems, not just products: Focus on creating the foundations, constraints, and contexts that enable good experiences to emerge, rather than crafting every detail yourself.

- Focus on the enduring hard things: Double down on the uniquely human work of understanding needs, behaviours, and contexts that no algorithm can fully grasp.

- Exercise (your) judgment: Use AI for speed and capability, but rely on your experience and values to decide what’s right.

AI doesn’t make design irrelevant. It makes the uniquely human aspects of design more valuable than ever. The wave is here, and those who learn to harness it will find themselves in an incredibly powerful position to shape what comes next. This isn’t about nostalgia for how design used to work. It’s about taking an optimistic stance, embracing what’s possible with these technologies, and going further than we ever could before. As Andy Grove put it perfectly:

Don’t bemoan the way things were, they will never be that way again. Pour your energy — every bit of it — into adapting to your new world, into learning the skills you need to prosper in it, and into shaping it around you.

John Moriarty leads the design team at DataRobot, an enterprise AI platform that helps AI practitioners build, govern and operate agents and predictive and generative AI models. Before this, he worked at Accenture, HMH and Design Partners.

From products to systems: The agentic AI shift was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.